The nonprofit sector has traditionally thrived on resourcefulness—maximizing limited budgets, stretching every donation, and finding innovative approaches to address complex social challenges. As artificial intelligence reshapes industries across the global economy, nonprofit organizations now stand at a critical crossroads: strategically integrate AI into their operations or risk diminishing their impact in an increasingly digital world.

The promise of AI for nonprofits is substantial. From revolutionizing fundraising strategies to streamlining administrative operations and dramatically expanding community outreach, AI offers tools that could fundamentally transform how nonprofits pursue their missions. However, this technological revolution brings legitimate concerns about algorithmic bias, environmental consequences, ethical implications, and the potential erosion of the human connection that lies at the heart of nonprofit work.

This raises pressing questions for nonprofit leaders: Is AI truly a transformative force for greater impact, or merely another technological trend that carries more risks than rewards? How can resource-constrained organizations responsibly navigate AI adoption while staying true to their core values and mission?

The reality is that AI has already permeated the nonprofit landscape, with pioneering organizations demonstrating how thoughtful implementation can enhance—rather than detract from—their impact. This guide explores the current state of AI in the nonprofit sector, examines the multifaceted challenges it presents, and offers a framework for ethical, mission-aligned adoption.

The Current Landscape: How AI Is Already Transforming Nonprofit Work

Forward-thinking nonprofits aren’t simply deploying AI as a cost-cutting measure—they’re using it to reimagine their approaches to complex social challenges and expand their reach in previously impossible ways:

Environmental Protection and Monitoring

WattTime exemplifies the transformative potential of AI in environmental advocacy. Their technology analyzes satellite imagery to estimate greenhouse gas emissions from power plants worldwide. This capability, infeasible through manual means, enables unprecedented environmental accountability and provides regulators, researchers, and activists with crucial data to drive policy change. Their “Automated Emissions Reduction” software uses predictive algorithms to help electricity users automatically shift their consumption to times when cleaner energy is being generated.

Human Rights Protection

DeliverFund represents a paradigm shift in combating human trafficking. Their AI systems analyze vast quantities of online data to identify patterns indicative of trafficking operations, providing law enforcement with actionable intelligence that has directly contributed to successful interventions. Their platform can process information across multiple websites, forums, and classified ads simultaneously—work that would require thousands of human hours—to recognize trafficking networks and identify victims in need of assistance.

Workforce Development

Bayes Impact’s “Bob” exemplifies AI’s potential to democratize access to crucial services. This AI-driven career coaching platform analyzes labor market trends to provide personalized employment advice to job-seekers who might otherwise lack access to professional career guidance. The system learns from each interaction to improve its recommendations, offering specific, actionable steps for professional development tailored to individual circumstances and local economic conditions.

Humanitarian Aid and Crisis Response

Tarjimly demonstrates how AI can overcome critical communication barriers in humanitarian settings. Their platform connects refugees and aid workers with volunteer translators through an AI-powered system that matches language needs with available translators, facilitating communication in crisis situations. The technology incorporates natural language processing to improve translation quality and manage requests efficiently across time zones and geographical boundaries.

Direct Service Delivery

GiveDirectly has revolutionized disaster response through AI-powered identification of affected households. Their systems analyze satellite imagery before and after natural disasters to identify damaged structures, allowing for faster and more precise cash aid distribution to those most in need. This technology has dramatically reduced assessment time from weeks to hours and enabled assistance to reach communities that might otherwise have been overlooked due to their remote location or lack of political influence.

Education and Learning Support

Khan Academy’s Khanmigo represents a significant advancement in personalized education technology. This AI tutor adapts to individual learning styles and paces, providing customized support that supplements traditional classroom instruction. The system identifies knowledge gaps, offers targeted explanations, and adjusts difficulty levels in real time, making quality education more accessible globally, particularly for students without access to individual tutoring.

In Malawi, Opportunity International introduced “Ulangizi,” a generative AI chatbot that offers agricultural advice in the local language, Chichewa. They also developed AI-based tools to assist teachers and school leaders, aiming to improve education in underserved communities. (Source: Time)

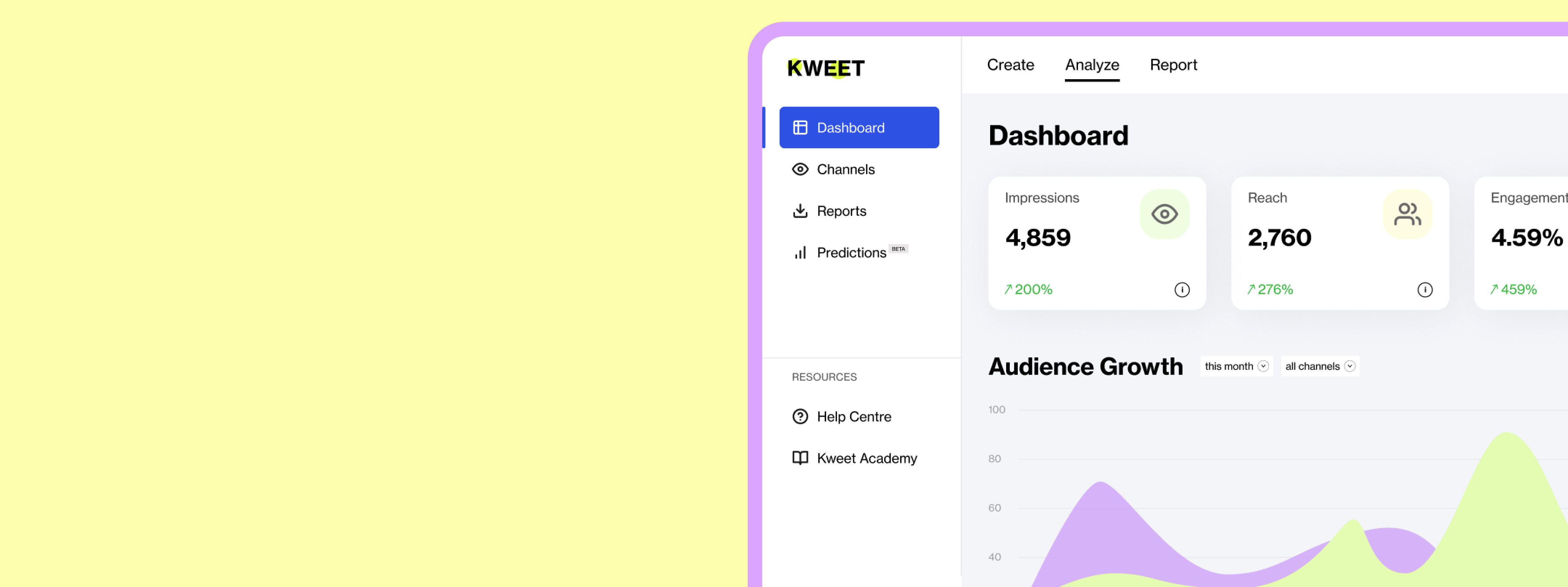

Marketing and Communications

Teach for Mexico uses Kweet to ideate and create social media and campaign content, helping them cut content creation time by 80% and giving their team time to focus on outreach and community engagement.

These examples illustrate that AI in the nonprofit context isn’t just about operational efficiency—though that benefit certainly exists. Rather, these organizations are using AI to fundamentally expand their capabilities, reach underserved populations more effectively, and address systemic challenges at scale.

Navigating AI Bias, Ethics, and Sustainability

Despite its promise, AI implementation in the nonprofit sector requires careful consideration of several critical challenges:

1. Algorithmic Bias: Ensuring Equitable Impact

The data-dependent nature of AI creates significant equity concerns for mission-driven organizations. AI systems learn from historical data, which often reflects and encodes societal inequities and structural biases. For nonprofits committed to social justice and equitable service delivery, unexamined AI implementation could undermine core values.

Potential manifestations of bias in nonprofit contexts:

- Grant distribution algorithms might systematically favor established organizations with robust digital infrastructure and extensive documentation over grassroots movements working in marginalized communities with less digital presence.

- Beneficiary selection systems could prioritize individuals who fit patterns recognized from historical data, potentially overlooking those with unusual or complex needs who don’t match established profiles.

- Donor targeting algorithms might disproportionately focus outreach on demographics with historically higher giving capacity, neglecting potential supporters from diverse backgrounds and perpetuating funding imbalances.

- Program evaluation tools that rely on standard metrics may fail to capture the nuanced impact of culturally specific approaches or innovative methodologies that don’t align with conventional outcome measures.

Strategies for mitigating algorithmic bias:

- Conduct regular bias audits of AI systems in use, particularly at decision points that affect resource allocation, program admission, or service delivery.

- Diversify data sources to ensure AI systems learn from experiences representing the full spectrum of communities served.

- Implement “fairness constraints” in algorithms that explicitly account for historical disadvantages and adjust recommendations accordingly.

- Establish clear human oversight protocols for all AI-informed decisions, particularly those with significant consequences for individuals or communities.

- Partner with affected communities to establish appropriate metrics for evaluating both program outcomes and the equity impact of AI systems themselves.
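A first-pass bias audit at a decision point can be as simple as comparing selection rates across groups. The sketch below assumes a hypothetical list of (group, selected) records exported from an AI-assisted screening step, and it flags any group whose selection rate falls below 80% of the highest group's rate, the "four-fifths" rule of thumb from US employment-selection guidance. The record format, group labels, and threshold are illustrative assumptions; a low ratio is a prompt for human review, not proof of bias, and a full audit would look at more than one metric.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the share of positive decisions per group.

    `decisions` is an iterable of (group, selected) pairs, e.g. one pair per
    applicant screened by an AI-assisted tool (hypothetical data format).
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_flags(decisions, threshold=0.8):
    """Map each group to (ratio to best group's rate, flagged?).

    With threshold=0.8 this applies the 'four-fifths' rule of thumb.
    """
    rates = selection_rates(decisions)
    best = max(rates.values())
    flags = {}
    for group, rate in rates.items():
        # If nobody was selected at all, there is no disparity to measure.
        ratio = rate / best if best > 0 else 1.0
        flags[group] = (round(ratio, 3), ratio < threshold)
    return flags


if __name__ == "__main__":
    # Made-up audit sample: 10 urban and 10 rural applicants.
    sample = ([("urban", True)] * 6 + [("urban", False)] * 4
              + [("rural", True)] * 3 + [("rural", False)] * 7)
    for group, (ratio, flagged) in disparate_impact_flags(sample).items():
        print(group, ratio, "REVIEW" if flagged else "ok")
```

Running this on the sample prints a ratio of 0.5 for the rural group and marks it for review, which is exactly the kind of decision point where the human oversight protocols above should take over.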

2. Environmental Considerations: Aligning Technological and Ecological Values

The environmental footprint of AI represents a particular concern for nonprofits with sustainability missions. Training large language models and running sophisticated AI systems requires enormous computing resources, resulting in significant energy consumption and associated carbon emissions.

For example, a widely cited 2019 study estimated that training one large natural language processing model, including an extensive neural architecture search, emitted roughly as much carbon as five average American cars over their lifetimes, fuel included. This presents an ethical dilemma for environmentally focused organizations: how to balance the operational benefits of AI with its ecological costs.

Approaches to environmentally responsible AI adoption:

- Calculate the AI carbon footprint of current and planned systems to make informed decisions about which applications justify their environmental impact.

- Select cloud providers with demonstrated commitments to renewable energy and carbon offset programs for AI operations.

- Adopt “AI minimalism” by employing simpler, more energy-efficient models when complex systems aren’t necessary for the task at hand.

- Explore edge computing options that process data locally rather than in energy-intensive data centers when appropriate.

- Advocate for industry-wide sustainability standards in AI development and deployment, leveraging the collective voice of the nonprofit sector.
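A first estimate of a workload's footprint does not require specialized tooling. A common back-of-the-envelope method multiplies the energy the hardware draws, scaled by the data center's power usage effectiveness (PUE, total facility energy divided by IT equipment energy), by the carbon intensity of the local grid. Every number below is a placeholder assumption; substitute measured power draw and your cloud provider's or grid operator's published figures.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    avg_power_kw: float            # average hardware draw in kW (assumed)
    hours_per_month: float         # runtime per month
    pue: float = 1.2               # data-center overhead multiplier (placeholder)
    grid_kg_per_kwh: float = 0.4   # grid intensity, kg CO2e per kWh (placeholder)

    def energy_kwh(self) -> float:
        # Facility energy = IT energy scaled up by PUE.
        return self.avg_power_kw * self.hours_per_month * self.pue

    def emissions_kg(self) -> float:
        # Emissions = energy consumed x carbon intensity of that energy.
        return self.energy_kwh() * self.grid_kg_per_kwh


def compare(workloads):
    """Return (name, kWh, kg CO2e) per month, largest emitter first."""
    rows = [(w.name, w.energy_kwh(), w.emissions_kg()) for w in workloads]
    return sorted(rows, key=lambda r: r[2], reverse=True)


if __name__ == "__main__":
    # Hypothetical comparison: a large model on a GPU server vs. a small local model.
    options = [
        Workload("large model on GPU server", avg_power_kw=2.0, hours_per_month=100),
        Workload("small classifier on laptop", avg_power_kw=0.05, hours_per_month=100),
    ]
    for name, kwh, kg in compare(options):
        print(f"{name}: {kwh:.1f} kWh, {kg:.1f} kg CO2e/month")
```

With these placeholder inputs the gap between the two options comes entirely from power draw, which is the practical argument for "AI minimalism": when a smaller model is good enough, its footprint shrinks roughly in proportion to the energy it uses. Lowering `grid_kg_per_kwh` shows the effect of choosing a provider that runs on cleaner electricity.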

3. The Ethical Concerns of AI-Enhanced Fundraising

Fundraising represents one of the most immediately applicable areas for AI in nonprofits. Machine learning can analyze donor histories to predict giving patterns, optimize campaign timing, personalize appeals, and identify potential major donors who might otherwise be overlooked. However, these capabilities raise important ethical questions about transparency, authenticity, and respect for donor autonomy.

Ethical considerations in AI-powered fundraising:

- Persuasion vs. manipulation: When does personalized outreach cross the line from effective communication to manipulation based on psychographic profiling?

- Privacy boundaries: How much donor data is appropriate to collect, analyze, and incorporate into outreach strategies?

- Transparency obligations: Do donors have a right to know when their giving history is being analyzed by algorithms to inform future solicitations?

- Authenticity of relationship: Can donor relationships remain genuine when communications are increasingly driven by predictive analytics?

Guidelines for ethical AI fundraising:

- Develop and publish an AI ethics policy specifically addressing fundraising practices and donor data usage.

- Provide opt-out mechanisms for donors who prefer not to have their data used in predictive modeling.

- Balance automation with authentic human connection, particularly for major donors and long-term supporters.

- Establish clear internal boundaries on acceptable uses of predictive analytics in donor communications.

- Regularly review automated messaging to ensure it accurately reflects organizational values and voice. At Kweet, we advocate for a human-in-the-loop approach in which no AI-generated content is published until a team member has approved it.
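The opt-out and human-review guidelines above translate naturally into two checks in any outreach pipeline: exclude donors who have declined predictive modeling before any scoring happens, and refuse to send AI-drafted messages until a named staff member has approved them. The `Donor` and `Draft` types, their fields, and the `send` callback are hypothetical illustrations, not Kweet's implementation or any CRM's API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Donor:
    donor_id: str
    allows_modeling: bool  # False if the donor opted out of predictive modeling


@dataclass
class Draft:
    text: str
    ai_generated: bool = True
    approved_by: Optional[str] = None  # staff member who signed off, if any


def modeling_pool(donors):
    """Keep only donors who have not opted out of predictive modeling."""
    return [d for d in donors if d.allows_modeling]


def approve(draft, reviewer):
    """Record a human sign-off; an anonymous approval is not accepted."""
    if not reviewer:
        raise ValueError("approval requires a named reviewer")
    draft.approved_by = reviewer
    return draft


def publish(draft, send):
    """Pass the text to `send` only if it is human-written or human-approved."""
    if draft.ai_generated and draft.approved_by is None:
        raise PermissionError("AI-generated content needs human approval first")
    send(draft.text)


if __name__ == "__main__":
    donors = [Donor("d1", True), Donor("d2", False)]
    print([d.donor_id for d in modeling_pool(donors)])  # ['d1']

    draft = Draft("Thank you for supporting our literacy program!")
    try:
        publish(draft, print)
    except PermissionError as err:
        print("blocked:", err)
    publish(approve(draft, "program manager"), print)
```

Putting the approval check inside `publish` itself, rather than relying on a reminder in a style guide, means an unapproved AI draft cannot be sent by accident; recording the reviewer's name also leaves an audit trail for the periodic reviews described later.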

New Frontiers: Additional Considerations for Nonprofits Exploring AI

Several additional considerations are getting attention from nonprofit leaders:

4. Data Privacy and Security: Protecting Vulnerable Communities

Nonprofits often work with sensitive information about vulnerable populations. The integration of AI systems—which typically require large datasets to function effectively—creates new privacy and security concerns.

Critical privacy considerations:

- Vulnerable populations data: Organizations serving marginalized communities must take extraordinary precautions with data that could potentially expose individuals to discrimination or harm if misused or breached.

- Cross-border data flows: International nonprofits must navigate complex and sometimes conflicting privacy regulations across jurisdictions.

- Informed consent challenges: Obtaining meaningful informed consent for AI data usage from beneficiaries with limited digital literacy or in crisis situations requires careful protocol development.

Recommended approaches:

- Implement privacy-by-design principles in all data collection and AI systems, minimizing data collection to what’s strictly necessary.

- Develop tiered access protocols that limit which staff can access different types of sensitive information.

- Invest in robust encryption and security measures, even when working with limited technology budgets.

- Create clear data retention policies that limit how long personal information is stored.

- Explore federated learning approaches that allow AI models to learn from data without centralizing sensitive information.
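Several of these recommendations (collect only what is needed, limit access by tier, delete on schedule) can be enforced in code rather than left to policy documents alone. This sketch assumes a hypothetical case-management record with three sensitivity tiers and a one-year retention period; the field names, tier assignments, and retention length are illustrative and should come from your own data governance policy. Encryption and federated learning are outside its scope.

```python
from datetime import date, timedelta

# Hypothetical sensitivity tiers: higher numbers are more sensitive.
FIELD_TIERS = {
    "case_id": 1,
    "service_type": 1,
    "first_name": 2,
    "phone": 2,
    "immigration_status": 3,
}


def minimize(raw):
    """Data minimization: drop any submitted field not on the approved list."""
    return {k: v for k, v in raw.items() if k in FIELD_TIERS}


def visible_fields(record, staff_tier):
    """Tiered access: return only fields at or below the staff member's tier.

    Unknown fields are treated as maximally sensitive and always hidden.
    """
    return {k: v for k, v in record.items()
            if FIELD_TIERS.get(k, float("inf")) <= staff_tier}


def is_expired(collected_on, today, retention_days=365):
    """Retention: True once a record has outlived the retention period."""
    return today - collected_on > timedelta(days=retention_days)


if __name__ == "__main__":
    submitted = {"case_id": "C-104", "service_type": "legal aid",
                 "first_name": "Ana", "phone": "555-0100",
                 "immigration_status": "pending", "religion": "n/a"}
    record = minimize(submitted)  # "religion" is never stored
    print(sorted(visible_fields(record, staff_tier=1)))  # ['case_id', 'service_type']
    print(is_expired(date(2023, 1, 10), today=date(2024, 3, 1)))  # True
```

A scheduled job that deletes every record for which `is_expired` returns true turns the retention policy from a promise into a routine, and hiding unknown fields by default keeps newly added data from leaking to lower tiers before anyone has classified it.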

5. The Digital Divide: Ensuring AI Adoption Doesn’t Increase Inequities

As nonprofits adopt increasingly sophisticated technological solutions, there’s a risk of widening the gap between well-resourced organizations and smaller, community-based groups—potentially replicating the very inequities many nonprofits seek to address.

Manifestations of the nonprofit digital divide:

- Resource disparities between large, established organizations and smaller, grassroots nonprofits affect their ability to implement and benefit from AI.

- Geographic inequities in technology infrastructure and expertise can disadvantage rural and Global South organizations.

- Linguistic biases in available AI tools may limit their utility for organizations working in non-dominant languages.

Strategies for addressing the nonprofit digital divide:

- Establish AI resource-sharing collectives among nonprofits with complementary missions to distribute technology costs and expertise.

- Develop AI capacity-building programs specifically designed for small and under-resourced organizations.

- Advocate for foundation funding dedicated to equalizing AI access across the sector.

- Create simplified, accessible AI tools specifically designed for nonprofit use cases that don’t require extensive technical expertise.

- Build peer learning networks to share knowledge and best practices around appropriate AI implementation and adoption.

6. Mission Alignment: Ensuring AI Serves Core Values

Perhaps the most fundamental consideration for nonprofits exploring AI is ensuring that technological adoption remains in service to—rather than distracting from—their core mission and values.

Key questions for assessing mission alignment:

- Does this AI application directly advance our primary mission, or is it primarily addressing operational concerns?

- How might this technology change our relationship with the communities we serve?

- Does this application reinforce or potentially undermine our organizational values?

- What resources will be redirected from direct service to technology implementation and maintenance?

Approaches to maintaining mission integrity:

- Form an AI ethics committee including board members, staff, and community representatives to evaluate proposed applications.

- Establish clear evaluation metrics that assess both operational benefits and mission advancement for each AI initiative.

- Create feedback mechanisms for staff and beneficiaries to identify concerns about technology impact on service quality.

- Regularly revisit and refine the organization’s technology strategy to ensure continued alignment with evolving mission priorities.

Implementation Framework: A Roadmap for Responsible AI Adoption

For nonprofits ready to explore AI integration, a structured approach can maximize benefits while minimizing risks:

Phase 1: Organizational Readiness Assessment

Before implementing specific AI solutions, nonprofits should evaluate their current technological infrastructure, data management practices, and organizational culture:

- Data inventory and quality assessment: Document what data you currently collect, how it’s stored, its quality and completeness, and existing data governance policies.

- Staff capability mapping: Identify existing technology skills and knowledge gaps among staff and leadership.

- Cultural readiness evaluation: Assess organizational attitudes toward technology adoption and change readiness.

- Resource allocation planning: Determine realistic technology budget parameters and potential funding sources for AI initiatives.

Phase 2: Strategic Application Identification

Rather than adopting AI broadly, identify specific high-value applications aligned with organizational priorities:

- Process mapping: Document key organizational processes to identify inefficiencies and bottlenecks that technology might address.

- Impact-to-effort matrix: Evaluate potential AI applications based on implementation difficulty versus potential mission impact.

- Risk assessment: Analyze each potential application for privacy, equity, and ethical concerns.

- Pilot selection: Choose 1-2 applications with favorable impact-to-effort ratios and manageable risk profiles for initial implementation.
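The impact-to-effort matrix and pilot selection steps can be made explicit with simple scores. This sketch assumes the team rates each candidate application from 1 to 5 on impact, effort, and risk (the risk score coming from the risk assessment step); the candidate names, the 1 to 5 scale, the quadrant labels, and the risk cutoff are illustrative assumptions rather than a standard method.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    impact: int  # estimated mission impact, 1 (low) to 5 (high)
    effort: int  # implementation difficulty, 1 (easy) to 5 (hard)
    risk: int    # privacy/equity/ethics risk, 1 (low) to 5 (high)


def quadrant(c, midpoint=3):
    """Place a candidate in a 2x2 impact-to-effort matrix."""
    high_impact = c.impact >= midpoint
    low_effort = c.effort < midpoint
    if high_impact and low_effort:
        return "quick win"
    if high_impact:
        return "major project"
    if low_effort:
        return "fill-in"
    return "deprioritize"


def select_pilots(candidates, max_risk=3, limit=2):
    """Pick up to `limit` manageable-risk candidates, best impact/effort first."""
    eligible = [c for c in candidates if c.risk <= max_risk]
    eligible.sort(key=lambda c: c.impact / c.effort, reverse=True)
    return eligible[:limit]


if __name__ == "__main__":
    ideas = [
        Candidate("grant report summaries", impact=3, effort=1, risk=2),
        Candidate("automated eligibility scoring", impact=5, effort=4, risk=5),
        Candidate("donor thank-you drafts", impact=2, effort=2, risk=1),
        Candidate("volunteer shift scheduling", impact=3, effort=2, risk=1),
    ]
    for c in ideas:
        print(f"{c.name}: {quadrant(c)}")
    print([c.name for c in select_pilots(ideas)])
```

Filtering on risk before ranking means a high-impact but high-risk idea such as automated eligibility scoring never becomes an initial pilot, however attractive its ratio; it can be revisited once governance structures are in place.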

Phase 3: Implementation and Integration

When deploying selected AI solutions, emphasize:

- Start with augmentation, not automation: Begin by using AI to enhance human decision-making rather than replacing it.

- Establish clear success metrics: Define specific, measurable outcomes that constitute success for each AI initiative.

- Create robust feedback loops: Develop mechanisms for ongoing evaluation and adjustment based on staff and beneficiary input.

- Document and share learnings: Maintain detailed records of implementation challenges and solutions to inform future projects.

Phase 4: Ongoing Governance and Evaluation

Establish structures for continuing oversight and improvement:

- Regular ethical reviews: Schedule periodic assessments of AI systems to identify emerging bias or other concerns.

- Impact audits: Evaluate whether AI implementations are achieving their intended benefits without creating unintended consequences.

- Stay current on best practices: Designate responsibility for monitoring evolving standards and regulations in AI ethics.

- Community accountability: Create mechanisms for the communities you serve to provide input on how technology is affecting service quality and accessibility.

Final Thoughts: AI as a Mission Multiplier

For nonprofits, AI represents neither a panacea nor a passing trend to be ignored. Rather, it offers a powerful set of tools that—when thoughtfully implemented—can dramatically enhance mission impact. The key distinction between organizations that will benefit from AI and those that may be harmed by it lies in the approach to adoption.

Nonprofits that view AI purely as a cost-cutting measure or adopt it haphazardly risk undermining their missions and values. In contrast, organizations that approach AI strategically, with clear ethical frameworks and a commitment to ongoing evaluation, can leverage these technologies to extend their reach and deepen their impact.

The most successful nonprofit adopters of AI will be those that:

- Maintain focus on mission alignment in all technology decisions

- Center the needs and perspectives of the communities they serve

- Approach AI as a complement to—not a replacement for—human judgment and connection

- Commit to ongoing learning and adaptation as both the technology and its implications evolve

With this balanced approach, nonprofits can harness AI’s transformative potential while remaining true to the human-centered values that define the sector. The future of nonprofit work isn’t purely technological or exclusively human; it’s a thoughtful integration of both that amplifies each organization’s unique contribution to creating a more just and equitable world.